How to Turn LLM Noise into Brand Strategy Using Entities and Citations

Talking to LLMs about your brand can sometimes feel like talking to a drunk grandparent at a wedding: unreliable, exaggerated, and full of stories that change every time you ask. One minute your brand is a rising star, the next it’s completely forgotten. It’s chaotic, and you’d be forgiven for thinking: how can I possibly use these insights to make serious decisions?

But here’s the thing – just like that grandparent, the wisdom is in there. You just need the right way to listen.

TL;DR

This post breaks down two powerful methods for turning messy LLM responses into actionable brand strategy:

- Entities: the core topics LLMs associate with your brand

- Citations: the sources they reference when talking about you or your competitors

Here’s what you need to know:

- LLM responses are inconsistent by design, but there’s value in the chaos. The insights you can draw are qualitative, not quantitative, which means you need much more flexible methodologies to get real insights.

- Entity tracking is your foundation. Don’t look for keywords; look for repeated mentions of core concepts. Averaged over time, these topic mentions reveal how your brand is understood in the AI space.

- Citations expose trust. LLMs repeatedly reference certain domains. Knowing which sites they trust (and how often) is essential for your own AI visibility.

- Commercial vs. knowledge prompts matter:

  - Commercial prompts (e.g. “best SEO tools”) surface brands and trusted industry sources.

  - Knowledge prompts (e.g. “what do you know about Brand X?”) reveal how a brand is described and contextualised, and by whom.

- Tracking entity consistency and citation frequency over time helps you see the full picture and act on it.

If you want to future-proof your SEO and own your AI visibility, this is where you start.

The Shift from Quantitative to Qualitative Analysis

If you ask an LLM about your brand three times in a row, you’ll get three different answers. For SEOs and brand managers used to tidy, trackable SERPs, this feels completely broken. In the world we’ve come from – the world of rankings, impressions, click-through rates – consistency has always been queen. If something changes, it’s measurable, and usually for a reason.

But that’s not how LLMs work. The shift we’re in right now is from quantitative SEO (number-centric!) to qualitative AI optimisation (feeling- and sentiment-centric).

Large language models don’t work from fixed rankings. They generate each response by sampling from probabilities learned from a vast training dataset, influenced by context, sentiment, and what they’ve “learned” to associate. Asking the same thing twice doesn’t give you clarity; it gives you chaos. But that chaos isn’t pure noise, and if you learn to track it correctly, you can get numerical insights out of it.

We’re not just measuring what ranks – we’re interpreting how AI understands a brand. It’s less about numbers in a dashboard, more about patterns in meaning. So yes, LLM data feels inconsistent at first. But if you track it properly over time, across multiple prompts with structured tools, those inconsistencies average out into something much more useful: a story of your brand’s presence in the AI space.
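To see why those inconsistencies average out, here is a toy simulation. It assumes, purely for illustration, that a model has a fixed underlying chance of mentioning each detail about a brand in any one response; the detail names and probabilities in `MENTION_PROBABILITY` are made up, not measured. Any single response varies, but the share of responses mentioning each detail settles close to those underlying chances as the number of responses grows.

```python
import random

# Hypothetical per-response mention probabilities for a made-up brand.
# These numbers are illustrative assumptions, not measurements.
MENTION_PROBABILITY = {
    "SEO": 0.9,
    "internal linking": 0.6,
    "karaoke": 0.05,
}


def simulate_response(rng: random.Random) -> set[str]:
    """Return the set of details that one simulated response mentions."""
    return {detail for detail, p in MENTION_PROBABILITY.items() if rng.random() < p}


def mention_rates(n_responses: int, seed: int = 0) -> dict[str, float]:
    """Fraction of simulated responses that mention each detail."""
    rng = random.Random(seed)  # seeded so every run is reproducible
    counts = {detail: 0 for detail in MENTION_PROBABILITY}
    for _ in range(n_responses):
        for detail in simulate_response(rng):
            counts[detail] += 1
    return {detail: c / n_responses for detail, c in counts.items()}


if __name__ == "__main__":
    # Three responses look erratic; thousands converge on the underlying rates.
    for n in (3, 30, 3000):
        print(n, mention_rates(n))
```

With only three responses, every rate is stuck at 0, 1/3, 2/3 or 1, which is exactly the "three different answers" problem; the averages only become meaningful with volume.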

Let’s first dive into the starting place for your qualitative analysis strategy: entity analysis.

Part 1: How to Use Entity Data From LLMs

Entities are underlying topics: people, places, brands and concepts. They are not keywords in isolation, but concepts that an LLM learns to recognise and connect across its huge training dataset. Here’s a cool post to help with comprehension if you’re brand new to entities.

A Hypothetical Introduction

The only way to get real data on what AI knows about you is to leverage entities. You need to prompt AI consistently for at least a month, and have a way to break what it says into entities. Let’s take an example. I’m going to prompt a hypothetical LLM with this query:

“What do you know about Genie Jones?”

On the first day, it might respond with:

Day 1:

“Genie Jones is a tall ginger weirdo who conquers SEO by day, owns a dog named Rupert, and somehow manages to be the last one standing (usually drunkenly) at every SEO conference. Also, she’s known for vanishing into the woods for epic nature walks where she communes with squirrels.”

Day 2:

“Genie is a fiery-haired SEO wizard who casts keyword spells while hiking rugged trails with Rupert, her trusty sidekick dog. At industry meetups, she’s infamous for her karaoke renditions of ‘Eye of the Tiger’ and once accidentally live-tweeted her own coffee spill.”

Day 3:

“Genie Jones is a redheaded SEO sorceress who hosts midnight poetry slam battles, rides a unicycle through vintage record shops, and shares her lair with Luna — a mischievous cat who occasionally hacks websites for fun.”

Despite the madness, some core entities keep popping up like clockwork, even though they’re expressed in lots of different words:

- Person: Genie Jones

- Hair Color: Ginger/redhead

- Profession: SEO expert/wizard/sorceress/weirdo?

- Pets: Dog Rupert (Day 1 & 2), plus cat Luna (Day 3) – an example of where the AI might go wrong?

- Hobbies/Traits: nature walks/communing with squirrels, karaoke mishaps, midnight poetry slams, unicycling, vintage records, and website-hacking cats
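Breaking responses into entities needs some way to map different wording (“ginger”, “redheaded”, “fiery-haired”) onto one concept. A minimal sketch, assuming a hand-built alias table, is whole-word matching against the lowercased response. The `ALIASES` mapping and the `extract_entities` and `tally` helpers are hypothetical names for illustration; a production pipeline would more likely use an entity-extraction model, but the principle of collapsing synonyms into one canonical entity is the same.

```python
import re
from collections import Counter

# Hypothetical alias table: canonical entity -> phrasings that count as a mention.
ALIASES = {
    "Red hair": ["ginger", "redhead", "redheaded", "fiery-haired"],
    "SEO expert": ["seo"],
    "Rupert (dog)": ["rupert"],
    "Luna (cat)": ["luna"],
    "Nature walks / hiking": ["nature walk", "nature walks", "hiking"],
}


def extract_entities(response: str) -> set[str]:
    """Return canonical entities whose aliases appear as whole words in the response."""
    text = response.lower()
    found = set()
    for entity, aliases in ALIASES.items():
        for alias in aliases:
            # \b word boundaries stop "seo" matching inside unrelated words
            if re.search(rf"\b{re.escape(alias)}\b", text):
                found.add(entity)
                break
    return found


def tally(responses: list[str]) -> Counter:
    """Count, per entity, how many responses mention it (at most once per response)."""
    counts: Counter = Counter()
    for response in responses:
        counts.update(extract_entities(response))
    return counts
```

Run over the three daily responses, this would count “Red hair” and “SEO expert” three times each, “Rupert (dog)” twice and “Luna (cat)” once. Counting once per response is a design choice; counting every occurrence is equally valid as long as you stay consistent over time.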

If we do this every single day for 30 days, we will start to get some serious pattern recognition insights into what is being said about me, and how frequently.

| Entity | Mentions in 30 Days | Notes |
|---|---|---|
| Genie Jones (Person) | 90 | Core brand identity |
| Ginger/Red Hair | 50 | Consistently mentioned hair color |
| SEO / SEO Expert | 70 | Primary profession |
| Rupert (Dog) | 60 | Loyal dog, frequently mentioned |
| Nature Walks / Hiking | 40 | Common hobby |
| Tall | 45 | Physical trait consistently noted |
| Drunk/Loud at Events | 35 | Social trait at SEO conferences |
| Karaoke Mishaps | 5 | Fun, quirky but rare mention |
| Midnight Poetry | 3 | Wacky, niche detail |
| Luna (Cat) | 2 | Likely hallucination / rare mention |
| Unicycling | 1 | Very rare, probably hallucinated |
| Squirrels | 1 | Weird hallucination, very low count |
| Website Hacking Cats | 1 | Purely bizarre hallucination |
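Once a month of counts is in, a simple way to separate signal from noise is to bucket entities by frequency. The sketch below reuses the hypothetical counts from the 30-day table; the thresholds (30 or more mentions = core, 5 to 29 = occasional, under 5 = likely noise or hallucination) are assumptions picked to roughly match the table’s notes, and you would tune them to your own prompt volume.

```python
# Counts copied from the hypothetical 30-day table above.
MENTIONS_30_DAYS = {
    "Genie Jones (Person)": 90,
    "SEO / SEO Expert": 70,
    "Rupert (Dog)": 60,
    "Ginger/Red Hair": 50,
    "Tall": 45,
    "Nature Walks / Hiking": 40,
    "Drunk/Loud at Events": 35,
    "Karaoke Mishaps": 5,
    "Midnight Poetry": 3,
    "Luna (Cat)": 2,
    "Unicycling": 1,
    "Squirrels": 1,
    "Website Hacking Cats": 1,
}

# Hypothetical thresholds; tune them to your own prompt volume.
CORE_MIN = 30
OCCASIONAL_MIN = 5


def bucket(count: int) -> str:
    """Label an entity by how often it was mentioned over the tracking window."""
    if count >= CORE_MIN:
        return "core"
    if count >= OCCASIONAL_MIN:
        return "occasional"
    return "likely noise / hallucination"


def bucket_entities(mentions: dict[str, int]) -> dict[str, list[str]]:
    """Group entity names by bucket, most-mentioned first within each group."""
    groups: dict[str, list[str]] = {}
    for entity, count in sorted(mentions.items(), key=lambda kv: -kv[1]):
        groups.setdefault(bucket(count), []).append(entity)
    return groups


if __name__ == "__main__":
    for label, entities in bucket_entities(MENTIONS_30_DAYS).items():
        print(f"{label}: {', '.join(entities)}")
```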

Now look – we are making some sense out of all the madness! Finally, some numbers you can start to take to your boss! WOOHOO! If these are the types of insights I can make up about myself, the real thing is going to be impressive.

A Real-world Example

Let’s do the same thing now, but use Waikay.io to understand how multiple brands are talked about in response to the same prompt. The method is the same, but now we’re collecting which brands are mentioned and how they’re described. The collected data may look a little like this!

How to Turn Entity Data into Brand Strategy

The heatmap visualises entity data collected from LLM responses over the past 30 days, and it does something most tools can’t: it strips away keywords and surfaces the underlying concepts the AI associates with your brand.

Take InLinks as an example. From one of our prompts, we saw InLinks mentioned 261 times in relation to the entity “topics”, but only 42 times in the context of “keywords”. That’s not a failure; it’s a signal. InLinks isn’t a keyword tool, so lower consistency in that area aligns perfectly with our actual proposition. What matters is that we’re mentioned in that context at all: it shows up as a weak signal, but a signal nonetheless. That’s the power of entity averages. It’s not about showing up everywhere; it’s about showing up in the right contexts with consistency over time.
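Under the hood, a heatmap like this is a brand × entity table of mention counts. A minimal sketch, assuming you already have one `(brand, entity)` record per mention (a hypothetical record format, not how Waikay.io stores its data), builds that table and computes how a brand’s mentions split across a set of entities. The usage lines plug in the InLinks figures of 261 and 42 from the example above.

```python
from collections import Counter, defaultdict


def build_matrix(records: list[tuple[str, str]]) -> defaultdict:
    """Turn (brand, entity) mention records into {brand: Counter({entity: count})}."""
    matrix: defaultdict = defaultdict(Counter)
    for brand, entity in records:
        matrix[brand][entity] += 1
    return matrix


def entity_share(matrix, brand: str, entity: str, compared_with: list[str]) -> float:
    """Share of a brand's mentions, across the compared entities, that fall on `entity`."""
    row = matrix[brand]
    total = sum(row[e] for e in compared_with)
    return row[entity] / total if total else 0.0


if __name__ == "__main__":
    records = [("InLinks", "topics")] * 261 + [("InLinks", "keywords")] * 42
    m = build_matrix(records)
    print(m["InLinks"])
    print(round(entity_share(m, "InLinks", "keywords", ["topics", "keywords"]), 3))
```

Here “keywords” accounts for 42 of 303 mentions, roughly 14%: weak, but present, which is the signal described above.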

So, once we had a 30-day snapshot of entity mentions across multiple prompts, we could clearly see where we were falling short. Despite InLinks being an internal linking tool, the entities “linking” and “tool” were both underrepresented.

Why? Because we didn’t have a clearly defined product page for “internal linking tool”. The AI couldn’t easily anchor that concept to us, and that’s a critical gap we would’ve missed without this data.
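A gap like this can be framed as a simple check: list the entities you want to be known for, then flag any whose share of your brand’s entity mentions falls below a floor. The `find_gaps` helper, the target list, the 5% floor and the toy counts used with it are all hypothetical placeholders, not figures from our data.

```python
def find_gaps(entity_counts: dict[str, int], targets: list[str], min_share: float) -> list[str]:
    """Return target entities whose share of all entity mentions is below min_share."""
    total = sum(entity_counts.values())
    gaps = []
    for target in targets:
        share = entity_counts.get(target, 0) / total if total else 0.0
        if share < min_share:
            gaps.append(target)
    return gaps


if __name__ == "__main__":
    # Hypothetical toy counts for illustration only.
    counts = {"topics": 50, "keywords": 10, "linking": 2, "tool": 1}
    print(find_gaps(counts, ["topics", "linking", "tool"], min_share=0.05))
```

With these toy numbers, “linking” and “tool” fall under the floor, which is the kind of result that pointed us towards a dedicated product page.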

This is exactly the kind of insight that drives practical content changes: not surface-level keyword tweaks, but foundational adjustments to how your brand is positioned in the language AI understands.

This is a workflow any brand, in any industry, can (and should) use. You won’t get clarity overnight, and frankly, if a tool tells you otherwise, it’s selling fiction.

But if you commit to consistent prompting, track entities over time, and analyse the averages, you’ll uncover how LLMs are constructing your brand story and where you need to step in and shape it.

That’s the future of AI optimisation and we hope to lead it.

Part 2: How to Use Citation Data From LLMs

Now let’s talk about source data: the links and citations LLMs claim to base their answers on.

Think back to my earlier comparison of LLMs to a grandparent at a wedding. They’ll confidently tell you where they heard a story – “Oh, that was definitely from Jim… or the news… or maybe it was that weird dream I had in ’84.” That’s how citations work in language models. You’ll get a source, but whether it’s real, reliable, or even exists? That’s another story.

This is why tracking source consistency matters just as much as tracking entities. LLMs will often include links in their replies, but they’re not grounded in traditional citation logic. Sometimes they cite credible domains repeatedly. Sometimes they hallucinate entire URLs. Sometimes they mash together fragments of two different sites and give you a source that doesn’t exist. But over time, patterns emerge – and those patterns are gold.
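Tracking source consistency starts with collapsing cited URLs to domains, so different pages (and slightly mangled variants) from the same site count together, then measuring on how many days each domain shows up. A minimal sketch using Python’s standard `urllib.parse`, assuming you have saved each day’s cited URLs in a dictionary; the helper names and sample domains are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Lowercased host without a leading 'www.', or '' if none can be parsed."""
    if "://" not in url:
        url = "//" + url  # let urlparse treat a bare "example.com/page" as a host
    host = urlparse(url).hostname or ""
    return host.removeprefix("www.")


def days_cited(citations_by_day: dict[str, list[str]]) -> Counter:
    """Number of distinct days on which each domain was cited at least once."""
    counts: Counter = Counter()
    for urls in citations_by_day.values():
        domains = {domain_of(u) for u in urls} - {""}
        counts.update(domains)
    return counts


if __name__ == "__main__":
    sample = {
        "day-01": ["https://www.example.com/a", "https://example.com/b", "https://other.example/x"],
        "day-02": ["example.com/c"],
    }
    print(days_cited(sample).most_common())
```

Counting days rather than raw citations keeps one URL-heavy response from swamping the picture; a domain cited on 25 of 30 days is a far stronger trust signal than one cited ten times in a single answer.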

The Two Types of Prompts and the Citations They Unlock

If you want to understand how LLMs “see” your brand and where they’re pulling that information from, you need to pay close attention to the sources they cite and, crucially, the kinds of prompts that trigger them.

There are two primary types of prompts to use in AI optimisation:

- Commercial prompts (e.g. “What are the best brands for holiday booking?”)

- Knowledge-based prompts (e.g. “What do you know about Brand X?”)

They each expose a different type of source-level insight, and that distinction is where the real value lives.

Commercial Prompts Surface the Power Domains

Commercial prompts are focused on brand discovery. These are the questions that simulate how people ask for options, recommendations, and rankings.

When an LLM responds, it doesn’t just give you a list of brands; it often tries to justify that list with citations. And those citations are a map of what the AI considers authoritative in your space (at least, they are if you look at them over time!).

You’ll typically see:

- Review aggregators

- High-authority blogs or tool comparison sites

- Industry publications

- Trusted commercial content (e.g. Zapier, Backlinko, etc.)

This is how you figure out which domains the LLM relies on to form brand opinions. If you’re not being mentioned, or if your competitors are getting cited alongside known trusted sources, that’s your signal: go earn that citation, placement, or feature.
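The “are competitors cited alongside trusted sources when we aren’t?” check can be automated once each commercial-prompt response is stored with the brands it mentioned and the domains it cited. This sketch assumes that record shape (a hypothetical dictionary with `brands` and `domains` lists); the brand names and `.example` domains in the usage lines are placeholders.

```python
from collections import defaultdict


def placement_gaps(responses: list[dict], our_brand: str) -> dict[str, set[str]]:
    """For responses that omit our brand, map each cited domain to the brands it appeared alongside."""
    gaps: defaultdict = defaultdict(set)
    for resp in responses:
        brands = set(resp["brands"])
        if our_brand in brands or not brands:
            continue  # either we were surfaced, or no brand was
        for domain in resp["domains"]:
            gaps[domain].update(brands)
    return dict(gaps)


if __name__ == "__main__":
    responses = [
        {"brands": ["CompetitorA", "OurBrand"], "domains": ["review-site.example"]},
        {"brands": ["CompetitorA", "CompetitorB"], "domains": ["review-site.example", "blog.example"]},
    ]
    print(placement_gaps(responses, "OurBrand"))
```

Domains that keep appearing in this output over a month are the ones worth pitching for a review, listing, or feature.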

Knowledge Prompts Reveal the Narrative Framework

On the other hand, knowledge-based prompts return more detailed and descriptive information about a single brand. And the sources attached to these replies are often different in both type and tone.

Expect to see:

- The brand’s own website and subdomains

- Editorial content explaining the brand’s background or values

- User forums, interviews and blog posts: contextual data that shapes tone and personality

These sources may not have the raw domain authority of those in commercial prompts, but they give you insight into how the LLM stitches together your brand narrative and what surrounding context it pulls in to support that.

You’ll also see hallucinations here more frequently: made-up URLs, half-correct citations, or citations to outdated content. But even hallucinations are a kind of insight: they show where the LLM expects information to live, even if it doesn’t exist yet.
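One way to act on that: compare the URLs LLMs cite on your own domain against the pages you actually publish (for example, the URLs listed in your XML sitemap). Cited paths that don’t exist are candidates for a new page or a redirect. A minimal sketch, assuming you have already loaded your published URLs into a list; `example.com` is a placeholder domain, and lowercasing paths is a simplifying assumption (many servers treat paths as case-sensitive).

```python
from urllib.parse import urlparse


def normalise_path(url: str) -> str:
    """Lowercased URL path without a trailing slash ('/' for the homepage)."""
    path = urlparse(url).path.rstrip("/").lower()
    return path or "/"


def missing_pages(cited_urls: list[str], published_urls: list[str], domain: str) -> list[str]:
    """Cited URLs on `domain` whose path matches no published page, sorted."""
    published = {normalise_path(u) for u in published_urls}
    missing = set()
    for url in cited_urls:
        host = (urlparse(url).hostname or "").removeprefix("www.")
        if host == domain and normalise_path(url) not in published:
            missing.add(url)
    return sorted(missing)


if __name__ == "__main__":
    cited = [
        "https://www.example.com/internal-linking-tool",
        "https://example.com/blog/",
        "https://other.org/x",
    ]
    published = ["https://example.com/", "https://example.com/blog"]
    print(missing_pages(cited, published, "example.com"))
```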

From our data, one source stood out: Backlinko. It showed up again and again across multiple prompts related to the best SEO tools. Whether the LLM was accurate in every case isn’t the point – the pattern of trust is.

How to Turn Source Data into Brand Strategy

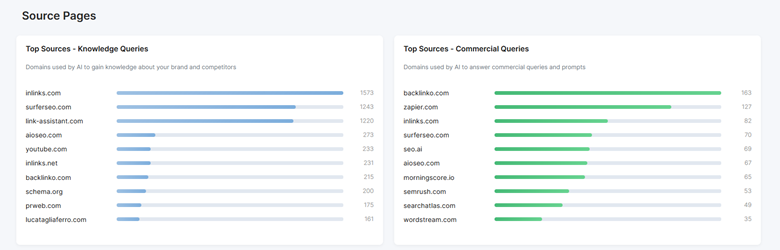

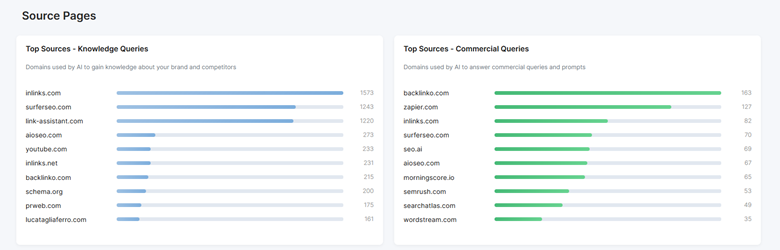

The charts above, taken after 30 days of source collection, highlight a powerful takeaway: Backlinko is consistently cited by LLMs across both commercial prompts and knowledge-based queries. That’s not a coincidence, and it’s not a one-off. It’s a signal.

This dual presence tells us two critical things:

- LLMs treat Backlinko as a high-authority source – both when asked for brand rankings and when explaining individual brand details.

- Its structure, format, and content are working incredibly well for AI visibility.

Backlinko is, essentially, a “known good” source in the eyes of the model – and that gives it enormous influence over who shows up and why. If you’re not already appearing on, near, or in comparison with domains like Backlinko for SEO/internal linking tool niches, you’re outside the AI trust loop. And that loop heavily informs which brands get surfaced in results.
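Spotting that kind of dual presence is a set intersection once citations are grouped by prompt type. A minimal sketch, assuming each record holds a `prompt_type` label (`"commercial"` or `"knowledge"`) and the domains cited in one response; the field names and `.example` domains are hypothetical, and `min_count` is an assumed knob for ignoring one-off citations.

```python
from collections import Counter


def domains_by_type(records: list[dict]) -> dict[str, Counter]:
    """Count citations per domain separately for each prompt type."""
    by_type: dict[str, Counter] = {}
    for rec in records:
        by_type.setdefault(rec["prompt_type"], Counter()).update(rec["domains"])
    return by_type


def cited_in_all_types(records: list[dict], min_count: int = 1) -> set[str]:
    """Domains cited at least min_count times under every prompt type present."""
    by_type = domains_by_type(records)
    if not by_type:
        return set()
    sets = [
        {domain for domain, count in counts.items() if count >= min_count}
        for counts in by_type.values()
    ]
    return set.intersection(*sets)


if __name__ == "__main__":
    records = [
        {"prompt_type": "commercial", "domains": ["trusted-blog.example", "review-site.example"]},
        {"prompt_type": "commercial", "domains": ["trusted-blog.example"]},
        {"prompt_type": "knowledge", "domains": ["trusted-blog.example", "brand.example"]},
    ]
    print(cited_in_all_types(records))
```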

This insight reshapes your next moves:

- Get featured on domains LLMs already trust. Placement on Backlinko could materially increase your LLM visibility because it’s already wired into the model’s mental map.

- Study their structure. Sites that LLMs cite frequently tend to have highly structured, segmented, and crawlable content. It’s not just the backlinks; it’s the clarity and format of the information.

- Use this to model your own presence. Being in the same citation neighbourhood as these sources boosts your chance of being included, recognised, and ranked.

This is exactly the kind of AI-first insight most brands are still sleeping on. But if you want to lead in LLM visibility, you need to know what the model trusts and make sure you’re standing right next to it.

In Conclusion

Start big with your data. Don’t be afraid to take a step back and understand what your brand is through trends. Citations and entity recognition are incredibly useful data, despite not being an exact science, so make sure you have the tools in place to collect this data over time.

[Written by Genie Jones and Reviewed and edited by Dixon Jones]