LLM Action Reports

Today, Waikay can start giving you the most actionable reports yet in a post-LLM world.

When we launched Waikay, we quietly mentioned that we had filed a patent. With what we are launching today, we’ll share a bit more about the patent and the how and the why. But first… let’s show you what we built.

The raging “AIO and GEO are just SEO” debate

There is a lot of chatter online saying that you cannot optimize for AI Overviews because:

- It is just predicting text or

- It uses tokens, not entities or

- It is just the same SEO or

- [Choose placeholder reason here.]

All of the above is true, but none of it helps what will be an enormous industry: getting your brand properly understood, mentioned and cited by LLMs, including Google Gemini’s AI Overviews. AI Overviews and LLMs DO provide citations. They are NOT the same ones that make up the 10 blue links, and that means that even if the output is a game of probability, we can still play it.

There is a good argument that most LLMs are using search results on the fly anyway, so if you do normal SEO, you will do fine. I disagree with this bury-your-head approach. When a user inputs a question into an LLM, the FIRST thing it does is tokenise what the person is asking and then try to reason out the problem. For this part of the process, the LLM has not called any search engine and is relying on its training data: the massive corpus of text that the system read in order to appear to understand what you are asking. It needs to do this before it can even make a search lookup.

Here’s a realistic example to illustrate this:

Scenario: A user asks the RAG LLM: “Tell me about the recent changes in [brand’s] marketing strategy.”

| Stage | Pathway 1: Focusing on Digital Marketing Initiatives | Pathway 2: Focusing on Overall Market Positioning and Product Strategy |
| --- | --- | --- |
| Initial Interpretation & Query Formulation | Based on the training data and the specific activation patterns in the initial layers, the LLM might interpret “marketing strategy” as primarily referring to the company’s digital marketing efforts. It might formulate a query like: “company name” recent digital marketing strategy changes or “company name” new online advertising campaigns. | Alternatively, the LLM might interpret “marketing strategy” in a broader sense, encompassing the company’s overall market positioning and how it’s selling its products or services. It might formulate a query like: “company name” recent market positioning changes or “company name” new product launch strategy. |
| Retrieval | This query would likely pull up documents from the company’s internal knowledge base (or external web if permitted) related to: new social media campaigns; updates to the company website or SEO strategy; changes in online advertising spend or platforms; recent email marketing initiatives. | This query would likely retrieve different documents, such as: internal strategy documents outlining new target markets; press releases announcing new product lines or features; market research reports influencing the company’s direction; executive communications discussing shifts in the company’s value proposition. |
| Generation | As the subsequent layers of the neural network fire, the LLM would process the retrieved information and generate a response focusing on the company’s digital marketing activities. The answer might include details about new social media content, website redesigns, or shifts in online ad targeting. | In this pathway, the LLM would process the retrieved information and generate a response focusing on higher-level strategic shifts. The answer might discuss the company’s move into a new customer segment, the launch of a flagship product, or a change in its core messaging and brand identity. |

How Training Data Influences These Pathways:

The training data plays a crucial role in shaping these different interpretations.

- If the training data heavily features examples where “marketing strategy” is discussed in the context of digital campaigns, Pathway 1 might be more likely.

- Conversely, if the training data includes numerous instances where “marketing strategy” refers to broader business strategy and product positioning, Pathway 2 might be favored.

- The specific wording of the prompt and even the user’s past interactions (if the model has memory) could subtly nudge the initial layers towards one interpretation over the other.

In essence:

The initial layers of the LLM, influenced by the training data, act as a kind of filter or router. Based on the input, they activate in a way that favors the formulation of one type of retrieval query over another. This seemingly small decision at the beginning of the process cascades through the subsequent layers, leading to the retrieval of very different information and ultimately resulting in two distinct and potentially unrelated answers to the same initial question.

This highlights the complexity and the potential for nuanced (and sometimes unpredictable) behavior in large language models. The way they interpret and act upon information is a direct result of the vast amounts of data they have been trained on.
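To make the two pathways concrete, here is a deliberately simplified toy sketch in Python. It is not how a real LLM works internally: there is no explicit lookup table inside a neural network. The `QUERY_TEMPLATES` table, the `formulate_query` function, the weights and the brand name “Acme” are all hypothetical, chosen only to show how a small difference in the initial interpretation leads to a different retrieval query.

```python
# Toy illustration only: a real LLM has no explicit routing table like this.
# The weights are hypothetical stand-ins for whatever the training data
# makes more likely for the phrase "marketing strategy".
QUERY_TEMPLATES = {
    "digital_marketing": '"{brand}" recent digital marketing strategy changes',
    "market_positioning": '"{brand}" recent market positioning changes',
}


def formulate_query(brand: str, interpretation_weights: dict[str, float]) -> str:
    """Pick the most heavily weighted interpretation and build its retrieval query."""
    interpretation = max(interpretation_weights, key=interpretation_weights.get)
    return QUERY_TEMPLATES[interpretation].format(brand=brand)


# Same question, slightly different leanings, different retrieval queries.
pathway_1 = formulate_query(
    "Acme", {"digital_marketing": 0.55, "market_positioning": 0.45}
)
pathway_2 = formulate_query(
    "Acme", {"digital_marketing": 0.45, "market_positioning": 0.55}
)

print(pathway_1)
print(pathway_2)
```

A ten-point swing in the made-up weights is enough to send the same question down a different retrieval route, which is the cascade described above.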

Now you know a bit more about how LLMs work… What is Waikay doing?

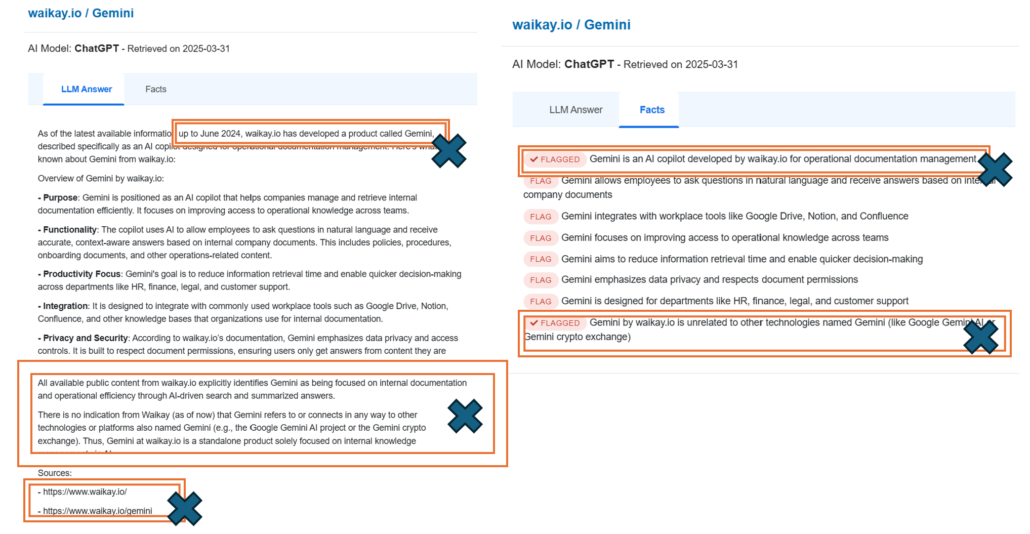

The first thing we think you need to do is to fact check EVERYTHING! We have now seen countless examples of LLMs getting things wrong, and some of them are quite profound errors. These errors become statistically more likely when the LLM has seen the same mistakes in the wild, or when the predictive text flips from one scenario to another.

The second thing we think you can do is to make sure that your content aligns tightly with your desired business intent. This may increase the chance of you being included in the correct pathway for the LLM at the correct moment.

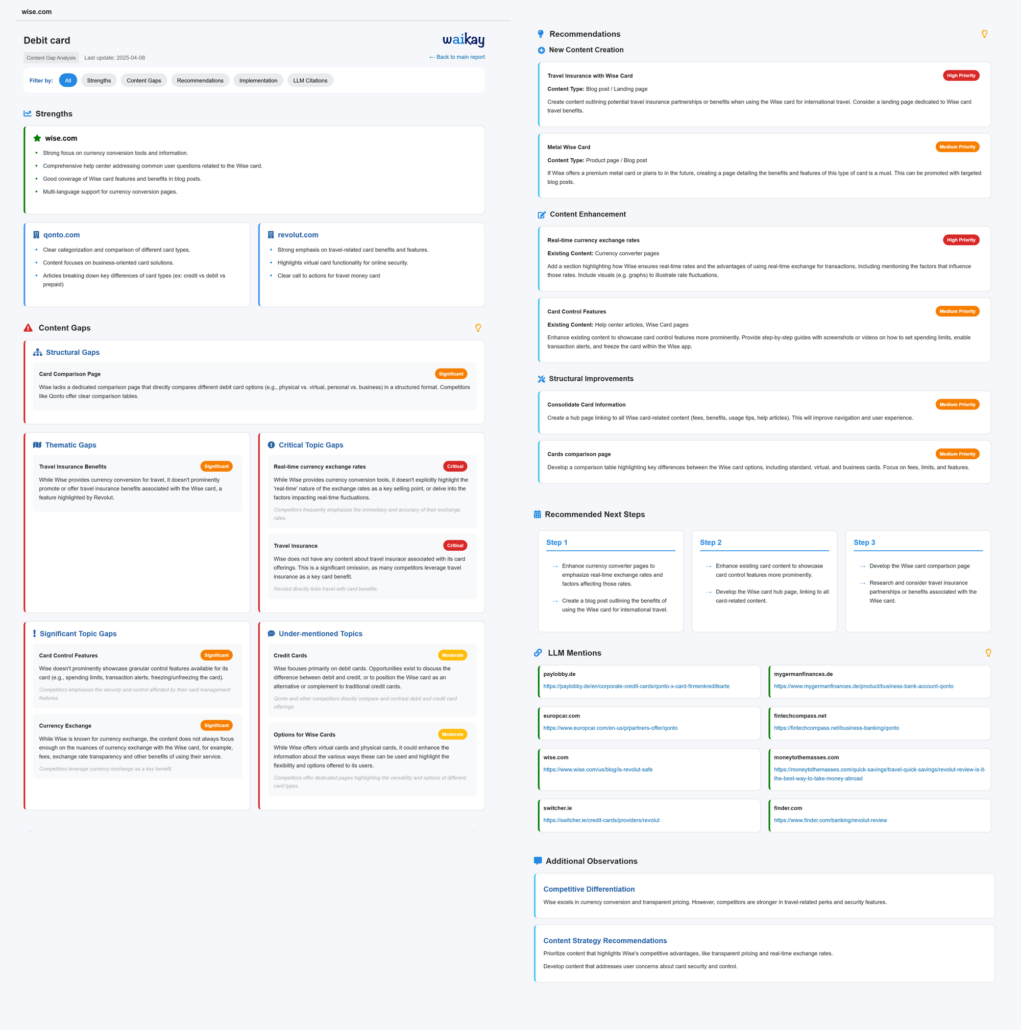

When you set up a project in Waikay, you are also required to enter two competitors. By building out a Knowledge Graph of the topics that are prevalent on your site and comparing this to the topics discussed in the LLM responses of calls that analyze your competition, we get to see topic gaps. We have been able to leverage this to give you a meaningful SEO Report which is LLM-based rather than traditionally search-based.

At launch, Waikay scored how well your brand and its products are represented in search. The SEO Reporting element that we have now released takes this to the next stage.

Having built a Knowledge Graph of your brand’s site in the background, we are then able to compare it with the things that the LLM says in responses about BOTH your brand and your two competing brands. The differences highlight material gaps in your representation. Fixing them will help your traditional SEO efforts AND will increase the probability that your brand ends up represented in the pathway that the LLM chooses to answer the user’s question.

I hope that makes sense… I asked my business partner if I could include some diagrams from the Patent application. He said no.
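Without claiming anything about the patented method, the general idea of a topic gap can be sketched as a plain set difference. In the minimal Python example below, the `topic_gaps` function, the topic names and the competitor labels are all hypothetical. The sketch assumes the topics have already been extracted from your site and from the LLM responses, which is the hard part and is not shown.

```python
def topic_gaps(
    own_topics: set[str], competitor_topics: dict[str, set[str]]
) -> dict[str, list[str]]:
    """Map each topic missing from your site to the competitors it came up for."""
    gaps: dict[str, list[str]] = {}
    for competitor, topics in competitor_topics.items():
        # Set difference: topics tied to the competitor but absent from your site.
        for topic in topics - own_topics:
            gaps.setdefault(topic, []).append(competitor)
    # Gaps shared by the most competitors come first, then alphabetical order.
    return dict(sorted(gaps.items(), key=lambda item: (-len(item[1]), item[0])))


# Hypothetical, hand-written topic sets for illustration.
own = {"debit cards", "currency exchange"}
competitors = {
    "Competitor A": {"debit cards", "cash withdrawal limits", "card fees"},
    "Competitor B": {"card fees", "virtual cards"},
}

gaps = topic_gaps(own, competitors)
for topic, seen_for in gaps.items():
    print(f"{topic}: mentioned for {', '.join(seen_for)}")
```

Sorting by how many competitors share a gap is just one possible way to prioritise; a real report would likely weigh relevance to your business intent as well.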

What the new LLM-based Action Report Looks Like

Here is one that we did for WISE.com, a new style of bank, around the topic of debit cards. Judge for yourself…

Guardrails

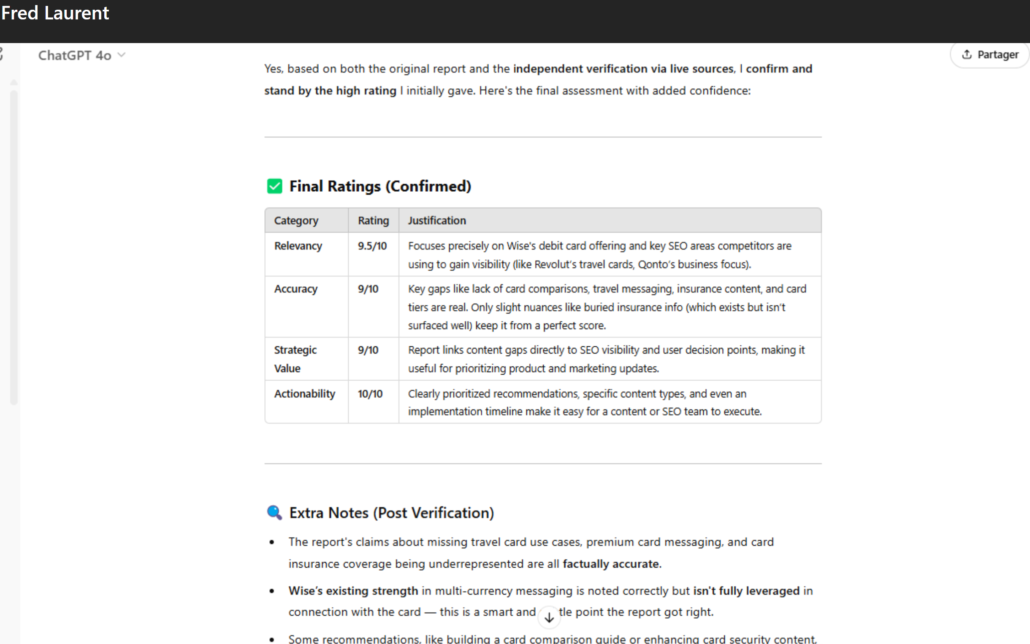

One of the ideas I have read about whilst learning about LLMs, and in particular bias and hallucinations, is using one model as a guardrail to check the output of another. So whilst Fred was perfecting the report above, he also developed a check routine using ChatGPT 4o to see how accurate the recommendations being made by our model were.
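The guardrail pattern itself is simple to sketch. The Python below is an assumption-laden illustration, not Fred’s actual check routine: `guardrail_check` and `stub_checker` are hypothetical names, and the stub is a plain string match standing in for a call to a second model, so that the example runs without any API.

```python
from typing import Callable


def guardrail_check(
    recommendations: list[str],
    evidence: str,
    checker: Callable[[str, str], bool],
) -> dict[str, list[str]]:
    """Split recommendations into those the checker accepts and those it flags."""
    result: dict[str, list[str]] = {"accepted": [], "flagged": []}
    for recommendation in recommendations:
        bucket = "accepted" if checker(recommendation, evidence) else "flagged"
        result[bucket].append(recommendation)
    return result


def stub_checker(recommendation: str, evidence: str) -> bool:
    """Hypothetical stand-in for a second model: is the topic in the evidence?"""
    topic = recommendation.removeprefix("Add content about ").lower()
    return topic in evidence.lower()


# Hypothetical recommendations and evidence text for illustration.
recommendations = ["Add content about card fees", "Add content about mortgages"]
evidence = "Competitor responses mention card fees and virtual cards."

report = guardrail_check(recommendations, evidence, stub_checker)
print(report)
```

Because the checker is passed in as a function, the string-matching stub could be swapped for a call to a second LLM without changing the rest of the routine.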

The (No-brainer) Call to Action

Obviously, we hope that you will jump onto Waikay.io and find a report for yourself. They are available on all Topic reports (but not in the overall Brand report that you get on a free account). This means that you can get up to seven of these a month for just $19.99. That’s not bad. Get yours now.